Building a data-driven application means treating data as the input that shapes how the application behaves. This article outlines how to use AI models to extract insights from that data and support decisions, and it covers the main stages involved: data architecture, model selection, training, integration, performance, and security.

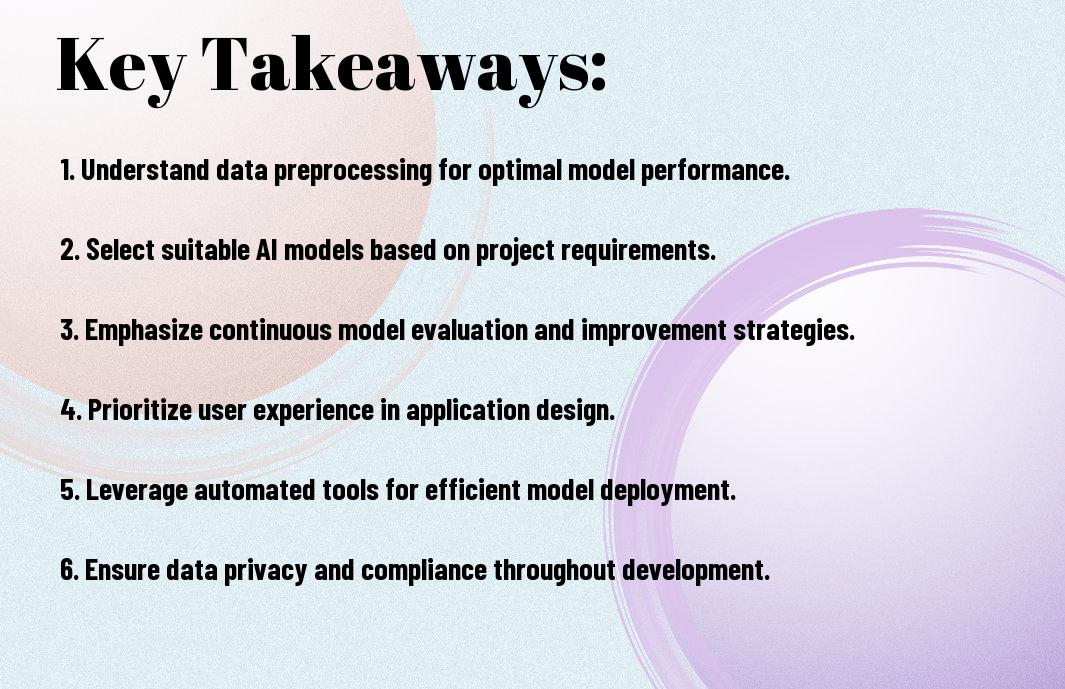

Key Takeaways:

- Developing data-driven applications requires understanding the main families of AI models (supervised, unsupervised, and reinforcement learning) and the kinds of problems each one suits, so that model output can inform business decisions and improve outcomes.

- Effective data preparation is essential for building accurate AI models. It involves data cleaning, feature engineering, and data transformation, so that models receive high-quality input and produce reliable output.

- Choosing the right AI model for a specific problem involves considering factors such as data size, complexity, and type, as well as the desired outcome, to select the most suitable algorithm and optimize performance.

- Model evaluation and validation are vital steps in the development process. For classification tasks, metrics such as accuracy, precision, and recall show how a model performs and where it needs improvement.

- Continuous monitoring and updating of AI models is necessary to adapt to changing data distributions and maintain optimal performance, ensuring that data-driven applications remain effective and reliable over time.
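The evaluation metrics named in the takeaways can be computed directly from true and predicted labels. The sketch below assumes a binary classification task; the function name `classification_metrics` and the sample labels are illustrative, not part of any particular library.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs) if pairs else 0.0,
        # precision: of everything predicted positive, how much was right
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # recall: of everything actually positive, how much was found
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Made-up labels, for illustration only.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Accuracy alone can mislead when one class is rare, which is why precision and recall are usually reported alongside it.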

Data Architecture Foundations

The foundation of a data-driven application lies in its data architecture, which you will design to support your AI models. You need to consider the flow of data, from collection to storage and processing, to ensure seamless integration with your application.

Data Collection Systems

Your data collection systems should handle large volumes of data from various sources, since this is the data you will use to train your AI models. Design them to collect, process, and transmit data efficiently.
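One common way to collect from several sources efficiently is to merge the streams and forward records in fixed-size batches. The following is a minimal sketch under that assumption; the source names (`web`, `mobile`), the record fields, and the helper names are hypothetical.

```python
from itertools import chain, islice


def tag(name, stream):
    """Attach the source name to every record from one stream."""
    for record in stream:
        yield {"source": name, **record}


def batched(records, batch_size):
    """Yield lists of at most batch_size records from any iterable."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def collect(sources, batch_size=3):
    """Merge several record streams and emit them in fixed-size batches."""
    merged = chain.from_iterable(tag(name, stream) for name, stream in sources.items())
    yield from batched(merged, batch_size)


# Hypothetical event streams, for illustration only.
sources = {
    "web": [{"user": 1, "event": "click"}, {"user": 2, "event": "view"}],
    "mobile": [{"user": 3, "event": "click"}, {"user": 1, "event": "purchase"}],
}
batches = list(collect(sources, batch_size=3))
print([len(batch) for batch in batches])
```

Because everything is built on generators, records are processed as they arrive rather than loaded into memory all at once.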

Storage Infrastructure

Your storage infrastructure should be scalable and secure so that you can store and manage large amounts of data. Plan for data redundancy, backup, and recovery to keep your data safe and accessible.

As you design your storage infrastructure, also consider the type of data you will store (structured or unstructured) and the level of accessibility you need. Both affect which storage solution fits your needs and how well it supports your AI models.
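To make the structured versus unstructured distinction concrete, the sketch below stores fixed fields in typed columns and keeps free-form details as a JSON text payload. It uses an in-memory SQLite database purely for illustration; the `events` table and its columns are assumptions, and a production system would likely use a different store.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory database, for illustration only
conn.execute(
    "CREATE TABLE events ("
    "id INTEGER PRIMARY KEY, user_id INTEGER NOT NULL, "
    "kind TEXT NOT NULL, payload TEXT)"
)

# Fixed fields go in columns; free-form details are serialized as JSON.
rows = [
    (1, "click", json.dumps({"button": "buy"})),
    (2, "review", json.dumps({"text": "Works well", "stars": 5})),
]
conn.executemany("INSERT INTO events (user_id, kind, payload) VALUES (?, ?, ?)", rows)
conn.commit()

# Structured columns are queried directly; the payload is decoded on read.
stored = [
    (user_id, kind, json.loads(payload))
    for user_id, kind, payload in conn.execute(
        "SELECT user_id, kind, payload FROM events ORDER BY id"
    )
]
conn.close()
print(stored)
```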

AI Model Selection

When choosing an AI model for your application, consider the data type, the complexity of the problem, and the desired outcome, so that the choice aligns with your project goals.

Supervised Learning Frameworks

Among the available options, supervised learning frameworks suit applications where your data is labeled. The labels let you train models that predict an output from your input data.
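As a small illustration of learning from labeled data, the sketch below implements a nearest-centroid classifier: it averages the examples of each label and assigns new inputs to the closest average. This is one simple supervised technique chosen for brevity, not a recommendation; the data and function names are made up.

```python
from collections import defaultdict
from math import dist


def fit_centroids(features, labels):
    """Average the feature vectors of each label to get one centroid per class."""
    grouped = defaultdict(list)
    for vector, label in zip(features, labels):
        grouped[label].append(vector)
    return {
        label: tuple(sum(column) / len(vectors) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def predict(centroids, vector):
    """Return the label whose centroid is closest to the given vector."""
    return min(centroids, key=lambda label: dist(centroids[label], vector))


# Labeled training data (illustrative values).
X = [(1.0, 1.0), (1.0, 2.0), (8.0, 8.0), (9.0, 8.0)]
y = ["low", "low", "high", "high"]
centroids = fit_centroids(X, y)
print(predict(centroids, (2.0, 1.0)), predict(centroids, (7.0, 9.0)))
```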

Neural Network Architectures

Neural networks come in a wide range of architectures, each designed for specific tasks, such as convolutional neural networks for image processing or recurrent neural networks for sequential data.

The right neural network architecture depends on your specific needs. Consider data dimensionality, model complexity, and the computational resources available: a larger model can capture more complex patterns but costs more to train and serve.
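One rough way to compare model complexity and resource needs is to count trainable parameters. The sketch below does this for a fully connected network only; the layer sizes are arbitrary examples, and convolutional or recurrent layers would need different formulas.

```python
def dense_parameter_count(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the width of each layer, input first, output last.
    """
    return sum(
        inputs * outputs + outputs  # weight matrix plus one bias per output unit
        for inputs, outputs in zip(layer_sizes, layer_sizes[1:])
    )


# Two hypothetical architectures for a 784-dimensional input and 10 classes.
small = dense_parameter_count([784, 32, 10])
large = dense_parameter_count([784, 512, 512, 10])
print(small, large)
```

The parameter count is only a proxy, but it gives a quick sense of how memory and training cost scale as layers widen.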

Model Training Pipeline

After defining your AI model’s architecture, you’ll need to focus on the model training pipeline, which involves a series of steps to prepare your data and train your model. This pipeline is necessary to ensure your model learns from your data effectively.

Data Preprocessing Methods

Before training, apply data preprocessing methods to clean your data and transform it into a format your model can use. Good preprocessing makes relevant features easier to extract and improves model performance.
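Two preprocessing steps that come up often are filling in missing values and putting numeric features on a common scale. The sketch below shows both for a single numeric column, assuming missing entries are represented as `None`; the function name and sample values are illustrative.

```python
from statistics import mean, pstdev


def impute_and_standardize(values):
    """Fill missing values with the column mean, then scale to zero mean and unit variance."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("column has no observed values")
    fill = mean(observed)
    # Filling with the mean leaves the column mean unchanged.
    filled = [fill if v is None else v for v in values]
    spread = pstdev(filled)
    if spread == 0:
        return [0.0 for _ in filled]  # a constant column carries no signal
    return [(v - fill) / spread for v in filled]


ages = [20.0, None, 40.0, 30.0]  # made-up column with one missing entry
scaled = impute_and_standardize(ages)
print(scaled)
```

In a real pipeline the mean and standard deviation would be computed on the training split only and then reused for validation and production data, so that no information leaks from data the model should not have seen.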

Training Optimization Techniques

With complex models and large datasets, you will need training optimization techniques such as regularization and hyperparameter tuning to improve how efficiently your model trains and how accurately it generalizes.

Other methods for fine-tuning training include batch normalization, dropout, and learning rate schedulers. Batch normalization and learning rate schedulers help training converge, while dropout reduces overfitting. Used together, they help you get more out of your data and build a robust model.
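Two of the techniques mentioned above are simple enough to write out by hand. The sketch below shows a step-decay learning rate schedule and an L2 regularization term; the default values (`drop=0.5`, `every=10`, `strength=0.01`) are arbitrary illustrations, not recommended settings.

```python
def step_decay(initial_rate, epoch, drop=0.5, every=10):
    """Learning rate multiplied by `drop` once every `every` epochs."""
    return initial_rate * drop ** (epoch // every)


def l2_penalty(weights, strength=0.01):
    """L2 regularization term that is added to the training loss."""
    return strength * sum(w * w for w in weights)


# The rate stays flat within each 10-epoch window, then halves.
rates = [step_decay(0.1, epoch) for epoch in (0, 9, 10, 25)]
penalty = l2_penalty([3.0, -4.0])
print(rates, penalty)
```

Deep learning frameworks ship their own schedulers and weight-decay options, so in practice these would usually be configured rather than hand-written.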

Integration Strategies

For a seamless integration of AI models into your application, you need to consider various strategies that cater to your specific requirements. You will explore different approaches to integrate AI models, enabling you to make informed decisions about your application’s architecture.

API Development

Exposing AI models through APIs lets your application use their capabilities without embedding the model code directly. The same approach works for pre-trained models hosted elsewhere: your application sends a request and receives a prediction, which simplifies adding AI-driven functionality.
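The core of a prediction API is a handler that validates a request, calls the model, and returns a response. The sketch below keeps that logic framework-agnostic so it could sit behind any web framework; the `score` function is a hypothetical stand-in for a trained model, and the request shape (`{"features": {...}}`) is an assumption.

```python
import json


def score(features):
    """Hypothetical stand-in for a trained model: a fixed linear scorer."""
    weights = {"visits": 0.02, "cart_items": 0.1}
    return sum(weights[name] * float(features[name]) for name in weights)


def handle_predict(request_body):
    """Turn a JSON request body into an (HTTP status, JSON response body) pair."""
    try:
        features = json.loads(request_body)["features"]
        prediction = score(features)
    except (json.JSONDecodeError, KeyError, TypeError, ValueError):
        # Malformed JSON, missing fields, or non-numeric values are client errors.
        return 400, json.dumps({"error": "expected features: visits, cart_items"})
    return 200, json.dumps({"prediction": round(prediction, 4)})


status, body = handle_predict('{"features": {"visits": 10, "cart_items": 3}}')
print(status, body)
```

Keeping validation and error handling in one place means a bad request produces a clear 400 response instead of an unhandled exception inside the model code.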

Microservices Implementation

The microservices architecture enables you to break down your application into smaller, independent services, allowing you to integrate AI models more efficiently. You can deploy AI models as separate microservices, making it easier to manage and update them without affecting the entire application.

Containerization complements microservices by keeping the runtime consistent across environments. Tools like Docker let you package an AI model together with its dependencies, which makes deployments easier to reproduce, scale, and manage.

Performance Optimization

All data-driven applications need optimization to run smoothly under load. For more on optimizing AI models, see the article “A Deep Dive into AI Inference and Solution Patterns”.

Scalability Considerations

As your data-driven application grows, you will encounter scalability challenges. Plan for them early so that your application can handle increased traffic and data.

Resource Management

As application demands grow, you must manage resources effectively. Allocate compute, memory, and storage deliberately to optimize performance and minimize costs.

Application performance depends heavily on resource management. Monitor resource usage and adjust allocation regularly so that the application keeps running smoothly and efficiently.
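One inexpensive way to save compute is to avoid re-running the model on inputs it has already seen. The sketch below uses a bounded cache from the standard library; `run_model` is a hypothetical stand-in for an expensive inference call, and the cache size is an arbitrary example.

```python
from functools import lru_cache

calls = {"model": 0}  # counts how often the underlying model actually runs


def run_model(features):
    """Hypothetical stand-in for an expensive inference call."""
    calls["model"] += 1
    return sum(features) / len(features)


@lru_cache(maxsize=1024)  # bound the cache so memory use stays predictable
def cached_predict(features):
    # features must be hashable, so callers pass a tuple rather than a list
    return run_model(features)


results = [cached_predict((1.0, 2.0, 3.0)) for _ in range(5)]
info = cached_predict.cache_info()
print(results, calls["model"], info)
```

Caching only pays off when identical inputs recur, and cached results need to be cleared (for example with `cached_predict.cache_clear()`) whenever the model is updated.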

Security Measures

Keep your data-driven application secure by implementing robust security measures to protect your AI models and data from unauthorized access and breaches.

Data Protection Protocols

Establish data protection protocols that ensure the confidentiality, integrity, and availability of your data, so that you keep control over sensitive information.
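As one example of a confidentiality measure, direct identifiers can be replaced with keyed digests before data reaches analytics or training systems. The sketch below uses HMAC with SHA-256 from the standard library; the key shown is a placeholder, and the function name is illustrative.

```python
import hashlib
import hmac


def pseudonymize(identifier, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder key for illustration; real keys belong in a secret store, not in code.
key = b"example-key-load-from-a-secret-store"
token_a = pseudonymize("alice@example.com", key)
token_b = pseudonymize("alice@example.com", key)
token_c = pseudonymize("bob@example.com", key)
print(token_a == token_b, token_a == token_c)
```

The same identifier always maps to the same token, so records can still be joined, while the original value cannot be recovered without the key. This is pseudonymization rather than full anonymization, so the tokens should still be treated as sensitive.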

Model Security Guidelines

One of the key aspects of securing your AI models is to follow established guidelines that outline best practices for model development, deployment, and maintenance, helping you to minimize potential risks and vulnerabilities.

These guidelines help you protect your AI models from attacks and breaches, which keeps your models reliable and trustworthy and preserves the integrity of your data-driven application. In practice, this means regularly updating and monitoring your models and using secure deployment and serving techniques.
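One small piece of secure deployment is checking that a model artifact has not been altered between training and serving. The sketch below records a SHA-256 digest and verifies it before use; the byte string stands in for a real model file, and the function names are illustrative.

```python
import hashlib
import hmac


def sha256_hex(data):
    """Hex digest of the artifact bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data, expected_digest):
    """Return True only when the artifact bytes match the recorded digest."""
    return hmac.compare_digest(sha256_hex(data), expected_digest)


artifact = b"serialized-model-bytes"  # stand-in for the contents of a model file
recorded = sha256_hex(artifact)       # digest stored at training time
tampered = artifact + b"!"
print(verify_artifact(artifact, recorded), verify_artifact(tampered, recorded))
```

A plain checksum detects accidental or unauthorized changes only if the recorded digest itself is stored somewhere an attacker cannot modify; signing the artifact is a stronger option.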

Final Words

This article covered the main building blocks of data-driven applications with AI models: data architecture, model selection, training, integration, performance, and security. With these in place, you can make informed decisions about your AI strategy and build applications that are more efficient, effective, and scalable.

FAQ

Q: What are the key components of building data-driven applications, and how do AI models contribute to their development?

A: Building data-driven applications involves several key components, including data collection, data processing, and data analysis. AI models play a significant role in this process by enabling the analysis and interpretation of complex data sets. These models can be used for predictive analytics, pattern recognition, and decision-making, allowing developers to create applications that can learn and adapt to user behavior. By leveraging AI models, developers can create more intelligent and responsive applications that provide personalized experiences for users.

Q: What are some common challenges that developers face when integrating AI models into their applications, and how can they be addressed?

A: Developers often face challenges such as data quality issues, the complexity of model training and deployment, and the need to keep AI-driven decisions explainable and transparent. To address these challenges, developers can focus on data preprocessing and validation, use techniques such as data augmentation and transfer learning to improve model performance, and apply interpretability techniques such as feature attribution. Additionally, developers can use cloud-based services and frameworks that provide pre-built AI models and automated machine learning capabilities to simplify the development process.

Q: How can developers ensure that their AI-powered applications are fair, transparent, and accountable, and what are some best practices for deploying AI models in production environments?

A: To ensure that AI-powered applications are fair, transparent, and accountable, developers can implement techniques such as bias detection and mitigation, model interpretability, and transparency in decision-making processes. Best practices for deploying AI models in production environments include monitoring model performance and data drift, using continuous integration and continuous deployment (CI/CD) pipelines, and implementing robust testing and validation procedures. Developers can also use techniques such as model ensemble and stacking to improve model performance and robustness, and use cloud-based services that provide automated model monitoring and maintenance capabilities to ensure that their AI-powered applications are reliable and trustworthy.
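Data drift monitoring can be as simple as comparing the distribution of a feature in production against its distribution at training time. The sketch below computes the Population Stability Index (PSI), one commonly used drift score; the bin edges and sample values are made up, and the thresholds people apply to PSI are rules of thumb rather than fixed standards.

```python
from math import log


def psi(expected, actual, edges):
    """Population Stability Index between two samples over shared bin edges."""

    def shares(sample):
        counts = [0] * (len(edges) + 1)
        for value in sample:
            # the bin index is the number of edges the value has reached
            counts[sum(value >= edge for edge in edges)] += 1
        # a small floor avoids log(0) for empty bins
        return [max(count / len(sample), 1e-6) for count in counts]

    return sum((a - e) * log(a / e) for e, a in zip(shares(expected), shares(actual)))


training = [1, 2, 3, 4, 5, 6, 7, 8]          # feature values seen at training time
live_shifted = [6, 7, 7, 8, 8, 9, 9, 10]     # production values that moved upward
edges = [3, 6]                               # three bins: <3, 3 to <6, >=6

stable_score = psi(training, training, edges)
drift_score = psi(training, live_shifted, edges)
print(stable_score, drift_score)
```

A score of zero means the two distributions fall into the bins in identical proportions, and larger values indicate a bigger shift. A rising score is a signal to investigate and possibly retrain, not proof that model accuracy has dropped.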